Data annotation is a critical component of any AI project, particularly in computer vision, where the precision of image and video labels directly affects the performance of machine learning models. Poorly annotated data leads to low model accuracy, unreliable predictions, and ultimately ineffective outcomes.

In our experience, clients often try to negotiate or minimize this phase of the project, seeking to reduce upfront costs. However, this approach almost always results in higher expenses later on, especially during the model training stage. Insufficient or inaccurate data annotation leads to longer training times, increased iteration cycles, and even the need for re-annotation, which significantly drives up costs. Proper data annotation is an investment that ensures smooth model development and robust outcomes, particularly in complex computer vision projects, where precision is paramount.

Developing a computer vision application that can be trained to recognize, classify, or identify image content requires good-quality data. Regardless of whether you need to train an AI model or just apply image processing algorithms, a dataset consisting of both images and precise information on their content is crucial for developing and evaluating the solution.

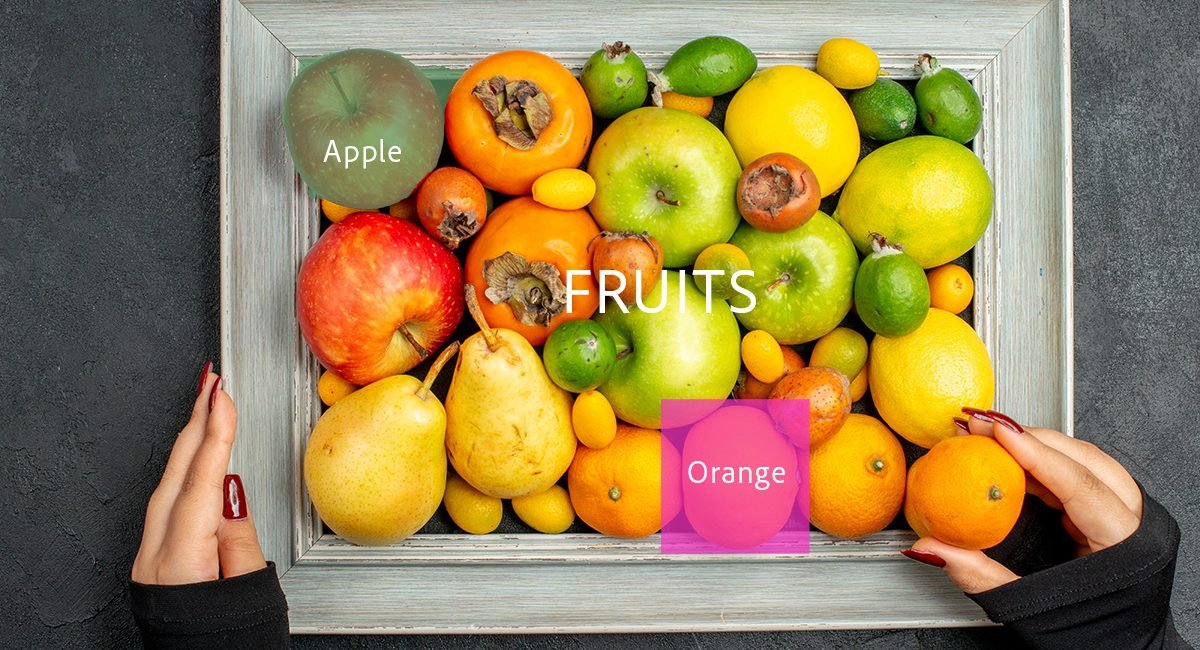

The process of enriching images with additional information about their content is called image annotation. The information is typically provided by domain specialists who are trained to describe the content according to the purpose of the application being developed. As computer vision applications spread across a variety of industries and use cases, the required information can be as simple as indicating whether an image shows a day or night scene, or as complex as identifying anomalies in an X-ray image.

Machine learning algorithms use annotated data for training. During the training phase, they optimize their parameters so that their output is consistent with the human annotation. The scope of annotation depends on the target application. For example, an algorithm developed to detect the presence of people in a scene can be trained on images in which people are marked as a group. But if the goal is to count the people in the image, the algorithm must be able to recognize each individual separately, so the annotation has to mark each person individually as well. Standard annotation techniques have been developed in the industry for specific applications.
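The difference between group-level and individual-level annotation can be sketched in a few lines. This is an illustration only: the dictionary layout, the file name, the label names, and the `count_label` helper are assumptions made for the example, not a standard annotation format.

```python
# Hypothetical annotation records; boxes are (x_min, y_min, x_max, y_max) in pixels.

# Group-level annotation: one region covers everyone in the scene.
group_annotation = {
    "image": "street.png",
    "regions": [
        {"label": "people", "box": (40, 60, 400, 300)},
    ],
}

# Individual-level annotation: one region per person.
instance_annotation = {
    "image": "street.png",
    "regions": [
        {"label": "person", "box": (40, 60, 120, 300)},
        {"label": "person", "box": (150, 80, 230, 300)},
        {"label": "person", "box": (310, 70, 400, 290)},
    ],
}


def count_label(annotation, label):
    """Count the annotated regions that carry the given label."""
    return sum(1 for region in annotation["regions"] if region["label"] == label)


print(count_label(group_annotation, "people"))     # one region, however many people it covers
print(count_label(instance_annotation, "person"))  # one region per person
```

The group record can tell a model that people are present, but it contains no information about how many. Only the per-person record supports counting.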

In this article, we will focus on the different types of labels used for images and assess when to use them, depending on the objectives of the project. Whether the goal is object detection, segmentation, or classification, selecting the right annotation type is crucial for optimizing the model’s performance and achieving the desired results efficiently.

Image classification is best used in cases where images need to be automatically organized, labeled, or grouped according to their content. It is particularly valuable in use cases like:

The first layer of information about an image is a general, predefined category that can be assigned according to its content. Examples include day/night categories, landscape categories (seaside, mountains, city), or faulty/correct products. The list of categories and subcategories is called a taxonomy. BoBox experts can classify images and objects within images based on the desired taxonomy, including features of residential real estate, land, types of buildings, or, for example, aerial maps. By converting image data into a structured format, BoBox enables clients to leverage AI and machine learning models for predictive analysis, automated decision-making, and other advanced applications.
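As a rough illustration of how a taxonomy turns image content into structured data, the sketch below stores classification labels as records and checks them against a list of allowed categories. The taxonomy contents reuse the examples from the text; the `ClassificationLabel` class, the `is_valid` helper, and the file names are hypothetical.

```python
from dataclasses import dataclass

# Hypothetical taxonomy: category -> allowed values.
TAXONOMY = {
    "time_of_day": {"day", "night"},
    "landscape": {"seaside", "mountains", "city"},
    "product_quality": {"faulty", "correct"},
}


@dataclass(frozen=True)
class ClassificationLabel:
    image_path: str
    category: str
    value: str


def is_valid(label, taxonomy=TAXONOMY):
    """Return True if the label uses a category and value defined in the taxonomy."""
    return label.value in taxonomy.get(label.category, set())


labels = [
    ClassificationLabel("img_001.png", "landscape", "seaside"),
    ClassificationLabel("img_002.png", "landscape", "desert"),  # not in the taxonomy
    ClassificationLabel("img_003.png", "time_of_day", "night"),
]

# Labels that fall outside the agreed taxonomy and need review.
rejected = [label for label in labels if not is_valid(label)]
print([label.image_path for label in rejected])
```

Validating labels against the taxonomy at annotation time is one simple way to keep a dataset consistent before it reaches model training.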

Bounding box annotation is a technique for labeling objects within an image or video by drawing rectangular boxes around them. The visual annotation is then typically transformed and stored as a set of vertices representing the object’s spatial location. Bounding boxes indicate where objects are in the image and identify the type of each object. This type of annotation can be imprecise, especially for irregularly shaped objects, because the rectangle also covers background around the object. It is nevertheless sufficient for many tasks such as object detection, where the model’s output has the same form: a bounding rectangle.
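The sketch below shows how a box might be stored and compared. Storage formats differ between tools, so the two conventions used here (corner coordinates and x/y/width/height) are assumptions chosen for illustration, as are the function names. Intersection over union (IoU) is a commonly used measure of how well a predicted rectangle matches an annotated one.

```python
def corners_to_xywh(x_min, y_min, x_max, y_max):
    """Convert corner coordinates to (x, y, width, height)."""
    return (x_min, y_min, x_max - x_min, y_max - y_min)


def xywh_to_vertices(x, y, w, h):
    """Return the four corner vertices, starting at the top-left corner.

    Assumes image coordinates, with y growing downwards.
    """
    return [(x, y), (x + w, y), (x + w, y + h), (x, y + h)]


def iou(a, b):
    """Intersection over union of two boxes given as (x_min, y_min, x_max, y_max)."""
    inter_w = max(0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


annotated = (10, 20, 50, 80)   # hypothetical human annotation
predicted = (15, 25, 55, 85)   # hypothetical model output

print(xywh_to_vertices(*corners_to_xywh(*annotated)))
print(round(iou(annotated, predicted), 3))
```

Because the annotation and the detector output share the same rectangular form, they can be compared directly with a single overlap score.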

Bounding box annotations are widely used in various fields, particularly when quick and approximate object localization is needed. Some common use cases include:

Polygon annotation provides a higher level of detail than rectangular bounding boxes, offering a closer, customized fit to the object’s shape. The object is outlined with a series of vertices, each marking a point on its boundary, so the resulting polygon follows the edges of irregular or non-rectangular objects and complex contours that a bounding box would miss. Polygon annotations are essential when precision is critical, such as when objects have intricate shapes or accurate segmentation is required, because they ensure that the whole shape of the object is presented to AI and machine learning models. This more granular description of an object’s form improves the quality of model training and the performance of applications that rely on detailed object recognition, segmentation, or localization.
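To make the precision gain concrete, the following sketch compares the area of a polygon annotation with the area of the rectangle that encloses it. The polygon area uses the standard shoelace formula; the function names and the sample triangle are made up for the example.

```python
def polygon_area(vertices):
    """Area of a simple polygon via the shoelace formula.

    `vertices` is a list of (x, y) pairs in order around the boundary.
    """
    twice_area = 0.0
    n = len(vertices)
    for i in range(n):
        x1, y1 = vertices[i]
        x2, y2 = vertices[(i + 1) % n]
        twice_area += x1 * y2 - x2 * y1
    return abs(twice_area) / 2.0


def enclosing_box(vertices):
    """Smallest axis-aligned box (x_min, y_min, x_max, y_max) containing the polygon."""
    xs = [x for x, _ in vertices]
    ys = [y for _, y in vertices]
    return (min(xs), min(ys), max(xs), max(ys))


def box_fill_ratio(vertices):
    """Fraction of the enclosing box that the polygon actually covers."""
    x_min, y_min, x_max, y_max = enclosing_box(vertices)
    box_area = (x_max - x_min) * (y_max - y_min)
    return polygon_area(vertices) / box_area if box_area > 0 else 0.0


# Hypothetical triangular object outlined with three vertices.
triangle = [(0, 0), (10, 0), (0, 10)]
print(polygon_area(triangle), enclosing_box(triangle), box_fill_ratio(triangle))
```

A low fill ratio means a bounding box around the same object would consist largely of background, which is the situation where polygon annotation tends to pay off.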

Polygon annotation is particularly useful in cases where a detailed and precise object outline is essential. Common use cases include:

For specific use cases, such as facial recognition or emotion detection, keypoint annotation is often the most suitable annotation technique. This method involves marking individual points of interest on an image, such as the corners of the eyes, the tip of the nose, and the edges of the mouth. These keypoints can then be connected to form a more comprehensive representation of facial features, allowing for detailed analysis of expressions and emotions. By providing precise coordinates of these critical points, keypoint annotation enables algorithms to understand and interpret complex facial dynamics, facilitating applications in security, user interaction, and behavioral analysis.
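A minimal sketch of what keypoint data could look like is shown below. The keypoint names, the pixel coordinates, and the list of connections are invented for illustration; real projects define their own keypoint schema.

```python
import math

# Hypothetical facial keypoints: name -> (x, y) pixel coordinates.
keypoints = {
    "left_eye": (30, 40),
    "right_eye": (70, 40),
    "nose_tip": (50, 60),
    "mouth_left": (35, 80),
    "mouth_right": (65, 80),
}

# Hypothetical connections between keypoints, forming a simple face outline.
CONNECTIONS = [
    ("left_eye", "right_eye"),
    ("left_eye", "nose_tip"),
    ("right_eye", "nose_tip"),
    ("mouth_left", "mouth_right"),
]


def connection_lengths(points, connections):
    """Euclidean length of each connection whose two endpoints are annotated."""
    return {
        (a, b): math.dist(points[a], points[b])
        for a, b in connections
        if a in points and b in points
    }


lengths = connection_lengths(keypoints, CONNECTIONS)

# Mouth width relative to eye distance: a scale-independent feature
# that does not change when the image is resized.
mouth_to_eye_ratio = (
    lengths[("mouth_left", "mouth_right")] / lengths[("left_eye", "right_eye")]
)
print(mouth_to_eye_ratio)
```

Features derived from distances between keypoints, rather than from raw pixel positions, are one way such annotations can feed into expression analysis.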

Additionally, this technique can be utilized in various contexts, including:

The BoBox team is here to support your data preparation needs. The use cases described above illustrate the diverse range of annotation tasks that you can delegate to our team of experts. Whether your specific goals lie in image analytics, computer vision, or any other AI-driven initiatives, you can rely on our extensive experience and proven credibility in delivering high-quality datasets swiftly. Our commitment to excellence ensures that the datasets we provide will effectively fuel your AI models, empowering your projects and enhancing their performance in real-world applications. Let us help you turn your data into actionable insights, enabling you to achieve your objectives with confidence.