Computer vision algorithms are trained on visual data enriched with additional layers of information, typically provided by human annotators. These layers describe the content of the image, such as the shapes or objects in the scene, together with their position, type or attributes. There are several ways to provide this information so that an AI model can be trained and evaluated for its intended use.

The most popular are rectangular envelopes around objects in the image, the so-called bounding boxes. They are simple and quick to draw, but depending on the shape of the annotated object they may also cover a significant portion of the background. Object detection algorithms trained on such annotations return their predictions in the same form. This output is sufficient in many real-life use cases, for example object counting.

However, some computer vision tasks require more detail, such as the exact shapes of the detected objects, and the algorithms need to be trained on correspondingly detailed annotations. Polygon annotations provide precise information on the object’s envelope, which in this case can be an arbitrary polygon. Such annotation is more costly, but it is flexible enough to describe even complex shapes.

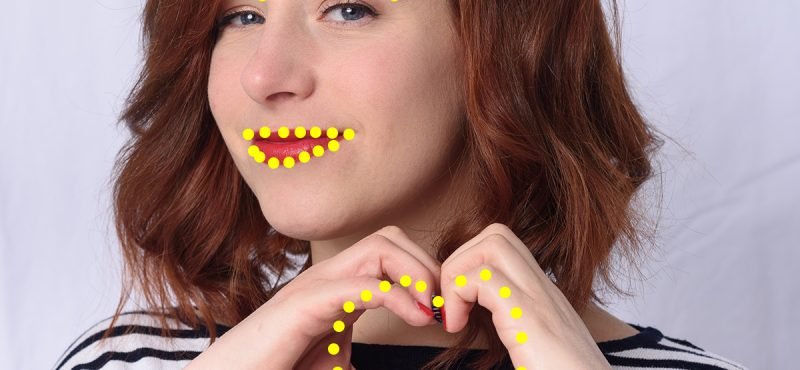
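To make the trade-off between the two annotation types concrete, the following minimal sketch derives a bounding box from a polygon annotation and estimates how much of that box is background. The function names and the sample triangle are hypothetical and chosen only for illustration; real annotation tools store these shapes in their own formats.

```python
from typing import List, Tuple

Point = Tuple[float, float]


def bounding_box(polygon: List[Point]) -> Tuple[float, float, float, float]:
    """Return (x_min, y_min, x_max, y_max) of the tightest axis-aligned box."""
    xs = [x for x, _ in polygon]
    ys = [y for _, y in polygon]
    return min(xs), min(ys), max(xs), max(ys)


def polygon_area(polygon: List[Point]) -> float:
    """Area of a simple (non self-intersecting) polygon via the shoelace formula."""
    total = 0.0
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]  # wrap around to close the polygon
        total += x1 * y2 - x2 * y1
    return abs(total) / 2.0


def background_fraction(polygon: List[Point]) -> float:
    """Fraction of the bounding box area that is NOT covered by the polygon."""
    x_min, y_min, x_max, y_max = bounding_box(polygon)
    box_area = (x_max - x_min) * (y_max - y_min)
    if box_area == 0:
        return 0.0  # degenerate shape: nothing meaningful to measure
    return 1.0 - polygon_area(polygon) / box_area


# Hypothetical annotation: a right triangle with legs of 4 and 3 pixels.
triangle = [(0.0, 0.0), (4.0, 0.0), (0.0, 3.0)]
print(bounding_box(triangle))
print(background_fraction(triangle))
```

For this triangle, half of the bounding box is background, which illustrates why elongated or diagonal objects are poorly described by rectangles alone.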

Unlike rectangular bounding boxes or polygon annotations, keypoints allow the algorithms to recognize not only the objects in the scene, but also their pose, key features and mutual positions. This kind of information is crucial in some important computer vision applications, including pose detection, emotion detection and face recognition. Keypoints pinpoint the key features of an object. For example, a human face is characterized by the eyes, nose, mouth, eyebrows and so on. Their mutual positions, distances and individual shapes enable, among other things, face re-identification and emotion detection. Similarly, the positions of joints in the human silhouette make it possible to determine a person’s pose and to predict their movements.

In many cases, keypoints can be naturally grouped into larger collections by introducing connections between them (like bones connecting joints in a human body). Such a collection of connected keypoints is called a skeleton. Both keypoints and skeletons are used to train AI models that detect object pose and movement, recognize activity, or assess whether an object’s features comply with a standard.
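One possible way to represent keypoints and a skeleton in code is sketched below. The class names, the `visible` flag and the three-point arm are assumptions made for this example, not a standard annotation schema; the sketch only shows how named points plus a list of connections already let you compute features such as bone lengths.

```python
from dataclasses import dataclass
from math import hypot
from typing import Dict, List, Optional, Tuple


@dataclass
class Keypoint:
    name: str
    x: float
    y: float
    visible: bool = True  # hypothetical flag for occluded or out-of-frame points


@dataclass
class Skeleton:
    keypoints: Dict[str, Keypoint]
    edges: List[Tuple[str, str]]  # pairs of keypoint names, e.g. bones

    def bone_length(self, a: str, b: str) -> Optional[float]:
        """Euclidean distance between two keypoints, or None if unavailable."""
        ka, kb = self.keypoints.get(a), self.keypoints.get(b)
        if ka is None or kb is None or not (ka.visible and kb.visible):
            return None
        return hypot(ka.x - kb.x, ka.y - kb.y)

    def bone_lengths(self) -> Dict[Tuple[str, str], Optional[float]]:
        """Length of every connection defined in the skeleton."""
        return {edge: self.bone_length(*edge) for edge in self.edges}


# Hypothetical arm annotation with an occluded wrist.
arm = Skeleton(
    keypoints={
        "shoulder": Keypoint("shoulder", 0.0, 0.0),
        "elbow": Keypoint("elbow", 3.0, 4.0),
        "wrist": Keypoint("wrist", 3.0, 10.0, visible=False),
    },
    edges=[("shoulder", "elbow"), ("elbow", "wrist")],
)
lengths = arm.bone_lengths()
print(lengths)
```

Keeping the connections separate from the points means the same skeleton definition can be reused across images, while each image only supplies coordinates and visibility.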

Keypoint annotation plays a vital role in many computer vision applications. See the following examples where keypoint annotation is a crucial component:

Preparing a good-quality training dataset involves manually annotating all defined keypoints (or skeletons) in the image. Human annotators need to recognize not only the object in the image, but also all of its keypoints and their connections. It is often difficult to indicate all keypoints because of the variety of orientations, occlusions and partial views of the objects. This is why clear annotation rules, edge-case decisions and exceptions should be well documented in the annotation guidelines. The complexity of the annotation poses a significant risk of inconsistency and errors, so regular quality control should be part of the annotation process. Finally, because detailed annotation is also costly, you may want to use a hybrid approach that supports human annotators with automated pre-annotation techniques. Our experts can help you at every stage of the annotation process to deliver the best quality datasets.
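As an illustration of what a simple quality-control check could look like, the sketch below compares the keypoints placed by two annotators on the same image and flags those that are missing on one side or differ by more than a pixel tolerance. The function name, the sample coordinates and the 5-pixel tolerance are assumptions for this example; a real process would take its thresholds from the annotation guidelines.

```python
from math import hypot
from typing import Dict, List, Tuple

Annotation = Dict[str, Tuple[float, float]]  # keypoint name -> (x, y)


def disagreements(a: Annotation, b: Annotation, tolerance_px: float) -> List[str]:
    """Names of keypoints that are missing in one annotation or too far apart."""
    flagged = []
    for name in sorted(set(a) | set(b)):
        if name not in a or name not in b:
            flagged.append(name)  # one annotator skipped this keypoint
            continue
        (xa, ya), (xb, yb) = a[name], b[name]
        if hypot(xa - xb, ya - yb) > tolerance_px:
            flagged.append(name)  # placements differ beyond the tolerance
    return flagged


# Hypothetical face annotations from two annotators.
annotator_1 = {"left_eye": (100.0, 120.0), "right_eye": (140.0, 121.0), "nose": (120.0, 140.0)}
annotator_2 = {"left_eye": (101.0, 121.0), "right_eye": (150.0, 121.0), "mouth": (120.0, 160.0)}

to_review = disagreements(annotator_1, annotator_2, tolerance_px=5.0)
print(to_review)
```

Flagged keypoints can then be routed to a reviewer, so that manual checking effort is spent on the images where annotators actually disagree.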