In the face of rising transportation demand and driver shortages, 3D point cloud annotation for autonomous vehicles is becoming a cornerstone of future mobility. From commercial fleets to public transit, an autonomous vehicle's ability to perceive and react to its environment depends on detailed, labeled spatial data. Against this background, autonomous vehicles are gaining special importance and may even prove critical to keeping transportation services running.

Autonomous taxis are already carrying thousands of passengers in California, and similar services are being tested and deployed at scale in China. These are not only stories from overseas, however: ambitious initiatives are also under way in Europe, as shown by Blees, a Polish company developing autonomous buses for urban use.

Contrary to popular belief, introducing artificial intelligence into transportation does not necessarily mean mass job losses. Given the current shortage of drivers, AI may prove to be less a competitor than a lifeline for the entire transportation industry.

Perception of the spatial environment around an autonomous vehicle is achieved by combining data from various on-board sensors, including cameras, LiDAR (Light Detection and Ranging), radar, inertial measurement units (IMUs), which combine accelerometers and gyroscopes, and GPS receivers. Each of these sensors provides complementary information: cameras capture high-resolution visual data used to detect colors, textures and lane markings; LiDAR generates dense 3D point clouds that represent the shape and distance of surrounding objects; radar detects objects reliably in adverse weather and measures their relative speed; and GPS and IMUs provide the vehicle's real-time position and orientation.

By combining (fusing) data from these sensors, autonomous systems build a comprehensive 3D model of the vehicle's environment. This model lets the vehicle interpret its surroundings in real time by recognizing objects, estimating distances, identifying drivable areas and tracking movement, all of which are crucial for safe navigation, decision-making and path planning.
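One concrete form of fusion is projecting LiDAR points into a camera image, so that each 3D point can be paired with the pixel (and therefore the color) it falls on. The sketch below is a minimal illustration under stated assumptions: a pinhole camera with hypothetical intrinsics (`FX`, `FY`, `CX`, `CY`, a 1280×720 image) and an idealized LiDAR-to-camera rotation with zero translation. Real systems use calibrated extrinsics and intrinsics and also correct for lens distortion.

```python
from typing import List, Optional, Sequence, Tuple

Vec3 = Tuple[float, float, float]

# Hypothetical camera intrinsics in pixels; real values come from calibration.
FX, FY = 1000.0, 1000.0   # focal lengths
CX, CY = 640.0, 360.0     # principal point
IMAGE_W, IMAGE_H = 1280, 720

# Assumed extrinsics: LiDAR axes (x forward, y left, z up) mapped onto
# camera axes (x right, y down, z forward), with zero translation.
LIDAR_TO_CAMERA_R = [
    [0.0, -1.0, 0.0],
    [0.0, 0.0, -1.0],
    [1.0, 0.0, 0.0],
]
LIDAR_TO_CAMERA_T = (0.0, 0.0, 0.0)


def lidar_to_camera(p: Vec3, r: Sequence[Sequence[float]], t: Vec3) -> Vec3:
    """Rigid transform p_cam = R * p_lidar + t."""
    return tuple(sum(r[i][j] * p[j] for j in range(3)) + t[i] for i in range(3))


def project_to_image(p_cam: Vec3) -> Optional[Tuple[float, float]]:
    """Pinhole projection to pixel (u, v); None if the point is behind the camera."""
    x, y, z = p_cam
    if z <= 0.0:
        return None
    return (FX * x / z + CX, FY * y / z + CY)


def fuse(points: List[Vec3]) -> List[Tuple[Vec3, Tuple[float, float]]]:
    """Pair each LiDAR point with the pixel it lands on, keeping only in-image hits."""
    pairs = []
    for p in points:
        uv = project_to_image(lidar_to_camera(p, LIDAR_TO_CAMERA_R, LIDAR_TO_CAMERA_T))
        if uv is not None and 0 <= uv[0] < IMAGE_W and 0 <= uv[1] < IMAGE_H:
            pairs.append((p, uv))
    return pairs
```

With these assumed values, a point 10 m straight ahead lands on the image center (640, 360), a point 1 m to its right lands at (740, 360), and points behind the vehicle are dropped. Once a point has a pixel, the camera's color at that pixel can be attached to it.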

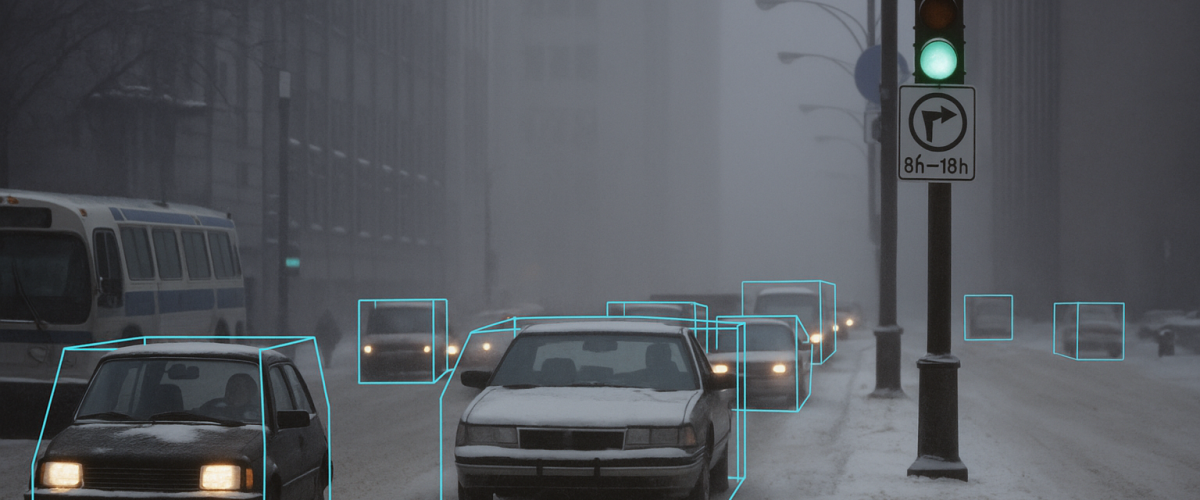

As autonomous vehicle (AV) technology advances, 3D point cloud annotation has become essential for training the perception models that AVs rely on for real-time navigation and safety. In this article, we explore how 3D point cloud annotation, especially cuboid labeling, enables safe and reliable AV perception systems, and how LiDAR, radar and cameras contribute to high-quality data labeling pipelines.

A 3D point cloud is a collection of data points in three-dimensional space, where each point has coordinates (X, Y, Z) relative to a reference origin (0, 0, 0), usually a fixed point on the vehicle such as its center or the LiDAR sensor itself. These point clouds are most often generated by LiDAR (Light Detection and Ranging) sensors, which scan the environment with laser pulses to capture the shape and distance of surrounding objects.

Each point in the cloud can contain additional information, such as intensity (surface reflectance), color (when combined with camera data) or a timestamp (used for time alignment between sensors). The result is a dense and detailed spatial representation of the physical world.
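To make this structure concrete, the sketch below models a single point with the attributes just described: coordinates, intensity, an optional color and an optional timestamp. The class and field names, the metre units and the `nearest_point` helper are illustrative assumptions, not a standard format; production pipelines usually store clouds as packed arrays rather than Python objects.

```python
import math
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass(frozen=True)
class LidarPoint:
    """One point of a cloud; field names and units are illustrative assumptions."""
    x: float  # metres from the reference origin
    y: float
    z: float
    intensity: float = 0.0                        # surface reflectance reported by the sensor
    color: Optional[Tuple[int, int, int]] = None  # RGB, only present after camera fusion
    timestamp: Optional[float] = None             # seconds, for aligning sensors in time

    def distance_from_origin(self) -> float:
        """Euclidean distance to the reference point (0, 0, 0)."""
        return math.sqrt(self.x ** 2 + self.y ** 2 + self.z ** 2)


def nearest_point(cloud: List[LidarPoint]) -> LidarPoint:
    """Return the point closest to the reference origin, e.g. the nearest return."""
    return min(cloud, key=LidarPoint.distance_from_origin)
```

For example, in a cloud containing `LidarPoint(10.0, 0.0, 1.0)` and `LidarPoint(3.0, 4.0, 0.0)`, the second point is nearest, at exactly 5 m from the origin.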

In autonomous vehicles, point clouds play a fundamental role in perceiving the 3D environment, enabling systems to detect structures, measure distances and understand the vehicle’s surroundings in a way that 2D images alone cannot.

Before annotation begins, 3D point cloud data must be properly prepared to ensure accurate and efficient labeling. While modern annotation tools handle a range of raw input data, well-prepared data significantly reduces noise, ambiguity and human error – ultimately improving model performance. The most critical preparation tasks include:

These steps are essential for creating high-quality datasets that feed reliable detection, tracking and prediction models for autonomous driving systems.
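As one illustration of this kind of preparation, the sketch below crops a cloud to a region of interest and then drops isolated points that are likely sensor noise (a simple radius-based outlier filter). The function names and all limits (`max_range`, `z_min`, `z_max`, `radius`, `min_neighbors`) are hypothetical choices for the example, and the brute-force neighbor search is only suitable for small clouds.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float, float]  # (x, y, z) in metres


def crop_to_roi(points: List[Point], max_range: float = 50.0,
                z_min: float = -3.0, z_max: float = 5.0) -> List[Point]:
    """Keep points within a horizontal radius and a height band (assumed limits)."""
    return [
        p for p in points
        if math.hypot(p[0], p[1]) <= max_range and z_min <= p[2] <= z_max
    ]


def remove_sparse_outliers(points: List[Point], radius: float = 0.5,
                           min_neighbors: int = 2) -> List[Point]:
    """Drop points with fewer than `min_neighbors` other points within `radius`.

    Brute-force O(n^2) search; large clouds would need a spatial index
    such as a k-d tree or voxel grid.
    """
    kept = []
    for i, p in enumerate(points):
        neighbors = sum(
            1 for j, q in enumerate(points)
            if j != i and math.dist(p, q) <= radius
        )
        if neighbors >= min_neighbors:
            kept.append(p)
    return kept
```

Applied to a tight cluster of three points, one isolated point at (20, 5, 0) and one return 80 m away, the crop removes the distant return and the outlier filter removes the isolated point, leaving only the cluster.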

To make sense of the spatial information captured in point clouds, autonomous vehicle (AV) systems rely on AI algorithms trained to detect, classify, and track objects in 3D space. These models require labeled data — a foundational element for supervised learning. The process of labeling objects such as vehicles, pedestrians, cyclists, buildings, or traffic signs is known as annotation.

One of the most widely used and interpretable methods for annotating 3D spatial data is cuboid annotation.

A cuboid is a 3D bounding box that encloses an object and is defined by:

Cuboids serve as a computationally efficient and semantically rich representation of objects in 3D scenes. Similar to 2D bounding boxes in image data, they provide enough structure for AI models to:

While less detailed than mesh or voxel-based representations, cuboids offer a practical balance between annotation effort, computational complexity and accuracy, making them suitable for most AV perception and planning tasks.
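A common way to parameterize a cuboid, assumed here for illustration, is by its center (x, y, z), its dimensions (length, width, height) and a yaw angle around the vertical axis. The sketch below uses that parameterization in a hypothetical `Cuboid` class and counts which points of a cloud fall inside a labeled box, a simple check that can help confirm a box actually encloses its object.

```python
import math
from dataclasses import dataclass
from typing import Iterable, Tuple


@dataclass(frozen=True)
class Cuboid:
    """Assumed 7-parameter cuboid: center, size and yaw about the vertical axis."""
    cx: float
    cy: float
    cz: float
    length: float   # extent along the object's heading
    width: float
    height: float
    yaw: float      # heading in radians, counter-clockwise from the x-axis
    label: str = "car"

    def contains(self, x: float, y: float, z: float) -> bool:
        """True if the point lies inside the (rotated) box."""
        dx, dy, dz = x - self.cx, y - self.cy, z - self.cz
        # Rotate by -yaw so the box becomes axis-aligned in its own frame.
        c, s = math.cos(-self.yaw), math.sin(-self.yaw)
        local_x = c * dx - s * dy
        local_y = s * dx + c * dy
        return (abs(local_x) <= self.length / 2
                and abs(local_y) <= self.width / 2
                and abs(dz) <= self.height / 2)

    def volume(self) -> float:
        return self.length * self.width * self.height


def count_points_inside(box: Cuboid, points: Iterable[Tuple[float, float, float]]) -> int:
    """Number of cloud points enclosed by the box."""
    return sum(1 for p in points if box.contains(*p))
```

With `Cuboid(10.0, 0.0, 0.0, length=4.0, width=2.0, height=1.5, yaw=math.pi / 2)` the box faces along the y-axis, so a point 1.5 m from the center along x falls outside it, even though it would be inside an unrotated box of the same size. This is why orientation is part of the label alongside position and size.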

Despite its advantages, cuboid annotation in 3D point clouds poses several challenges due to the unstructured and sparse nature of the data:

To address these issues, advanced annotation tools support features such as:

By combining visual context and interactive tooling, annotators can significantly improve both efficiency and labeling quality, even in complex or cluttered environments.

Cuboid-annotated 3D point clouds are a cornerstone of autonomous vehicle (AV) perception systems, enabling the vehicle to understand and interact with its environment in real time. These annotations serve as critical input for various subsystems, including detection, planning, navigation, and simulation. Key applications include:
